Note: this article was written 153 days ago and last modified 152 days ago, so some of the information may be out of date.


Deploying K8S with kubeadm on CentOS 7

1) Preparation before installation:

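- All machines in the cluster must be able to reach each other over the network (memory ≥ 2 GB, CPU ≥ 2 cores, disk ≥ 30 GB).

- Every machine needs Internet access, because the images are downloaded online.

- Swap must be disabled.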
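The requirements above can be checked before starting. A minimal preflight sketch follows; the function names are hypothetical, it covers CPU, memory and swap only, and the 1700 MiB memory threshold is an assumption that allows for a 2 GB machine reporting slightly less than 2048 MiB as MemTotal.

```bash
# Hypothetical preflight helpers; file paths are parameters so the checks can
# also be run against saved copies of the /proc files.

# Print MemTotal from a meminfo-format file, in whole MiB
mem_total_mib() {
  awk '/^MemTotal:/ {print int($2 / 1024)}' "${1:-/proc/meminfo}"
}

# Succeed if a /proc/swaps-format file lists at least one active swap device
# (the first line of /proc/swaps is a header)
swap_active() {
  awk 'NR > 1 {found = 1} END {exit !found}' "${1:-/proc/swaps}"
}

# Check CPU count, memory and swap; 1700 MiB is an assumed memory threshold
preflight() {
  local rc=0
  if [ "$(nproc)" -lt 2 ]; then echo "need at least 2 CPUs"; rc=1; fi
  if [ "$(mem_total_mib)" -lt 1700 ]; then echo "need about 2 GB of memory"; rc=1; fi
  if swap_active; then echo "swap is still enabled"; rc=1; fi
  return "$rc"
}

# Usage: preflight && echo "machine meets the basic requirements"
```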

Perform the initial system configuration:

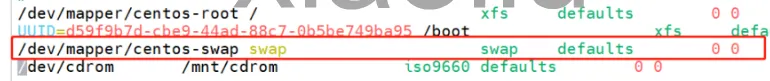

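```bash
# Stop and disable the firewall
systemctl stop firewalld
systemctl disable firewalld

# Disable SELinux (setenforce 0 switches to permissive mode now;
# the config change takes full effect after a reboot)
setenforce 0
sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

# Disable swap
# Temporarily; takes effect immediately
swapoff -a
# Permanently: delete or comment out the swap line in /etc/fstab, then reboot
sed -i '/\/dev\/mapper\/centos-swap.* swap/ s/^/#/' /etc/fstab
```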
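The sed command above only matches an LVM swap volume named /dev/mapper/centos-swap. On machines where swap is a plain partition or is referenced by UUID, a more general sketch (hypothetical helper name, GNU sed assumed) comments out any uncommented line whose filesystem type field is swap:

```bash
# Hypothetical helper: comment out every active swap entry in an fstab-format file.
# A line matches when its third field (the filesystem type) is "swap",
# whatever the device is called; lines already starting with "#" are skipped.
comment_swap_entries() {
  local fstab=${1:-/etc/fstab}
  sed -i -E '/^[[:space:]]*[^#[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' "$fstab"
}

# Usage (keep a backup first):
#   cp /etc/fstab /etc/fstab.bak && comment_swap_entries /etc/fstab
```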

Add hosts entries on the master node:

```bash
# Adjust the IPs and hostnames to match the hostnames you have set
cat >> /etc/hosts <<EOF
10.10.10.11 k8s-1
10.10.10.12 k8s-2
10.10.10.13 k8s-3
EOF
```
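Appending with cat >> adds duplicate lines every time the step is re-run. A small idempotent variant, sketched with a hypothetical add_host_entry helper, only appends a hostname that is not listed yet. Each machine also needs its own hostname set to match, for example with hostnamectl:

```bash
# Hypothetical helper: append "IP hostname" to a hosts-format file only when the
# hostname is not listed yet, so re-running the setup does not create duplicates.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # awk exits 0 if any non-comment line already lists the hostname
  if ! awk -v n="$name" '/^[[:space:]]*#/ {next} {for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$file"; then
    echo "$ip $name" >> "$file"
  fi
}

# Usage: set each machine's own hostname first, then add the entries, e.g.
#   hostnamectl set-hostname k8s-1      # on 10.10.10.11
#   for entry in "10.10.10.11 k8s-1" "10.10.10.12 k8s-2" "10.10.10.13 k8s-3"; do
#     add_host_entry $entry             # unquoted on purpose: splits into IP and name
#   done
```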

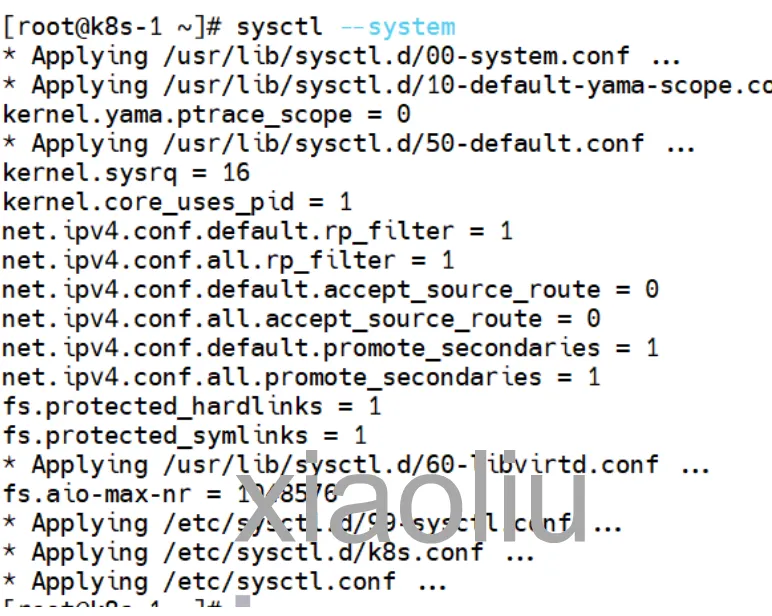

Pass bridged IPv4 traffic to the iptables chains (run this on every machine):

```bash
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
sysctl --system
```
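On most kernels the net.bridge.* keys only exist after the br_netfilter module is loaded, so sysctl --system may report them as unknown on a fresh machine. A sketch that loads the module, persists it through /etc/modules-load.d (the k8s.conf file name there is an assumption), and verifies that all three values really are 1; sysctls_ok is a hypothetical helper:

```bash
# The keys that must be set to 1 (same three as in the sysctl file above)
required_sysctls="net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward"

# Read "key = value" lines on stdin (the format `sysctl KEY...` prints and the
# sysctl file uses) and succeed only if every required key equals 1
sysctls_ok() {
  awk -v keys="$required_sysctls" '
    { val[$1] = $3 }
    END {
      n = split(keys, k, " ")
      for (i = 1; i <= n; i++)
        if (val[k[i]] != "1") { print "not set to 1: " k[i]; bad = 1 }
      exit bad
    }'
}

# Usage (as root):
#   modprobe br_netfilter                               # creates the net.bridge.* keys
#   echo br_netfilter > /etc/modules-load.d/k8s.conf    # load it again at boot
#   sysctl --system
#   sysctl $required_sysctls | sysctls_ok && echo "sysctl settings active"
```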

Install ntpdate and synchronize the system time:

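```bash
# CentOS 7
yum install ntpdate -y
# Time servers:
#   ntp.aliyun.com   Alibaba Cloud NTP server
#   ntp.ntsc.ac.cn   NTP server of the National Time Service Center, Chinese Academy of Sciences
# Synchronize the time
ntpdate ntp.ntsc.ac.cn
```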
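A single ntpdate run only corrects the clock once, and large clock differences between nodes can make certificates appear not yet valid. One way to keep the clocks aligned, assuming cron is running and ntpdate is installed at /usr/sbin/ntpdate as on CentOS 7, is a periodic cron job; the helper name, the cron.d file name and the 30-minute interval are arbitrary choices:

```bash
# Hypothetical helper: write a cron.d entry that re-syncs the clock every 30 minutes
write_ntp_cron() {
  local server=${1:-ntp.ntsc.ac.cn} file=${2:-/etc/cron.d/ntpdate}
  # /etc/cron.d entries carry a user field ("root") between the schedule and the command
  printf '*/30 * * * * root /usr/sbin/ntpdate %s >/dev/null 2>&1\n' "$server" > "$file"
}

# Usage (as root): write_ntp_cron ntp.aliyun.com
```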

2) Install Docker on all machines

```bash
yum install -y yum-utils
yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
yum install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y
systemctl start docker && systemctl enable docker
```

Change Docker's cgroup driver to systemd:

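```bash
mkdir -p /etc/docker
cat <<EOF > /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
# Restart Docker so it picks up the new daemon.json
systemctl restart docker
```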
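Docker only reads daemon.json at startup, so the new cgroup driver takes effect after a restart. A sketch for confirming it afterwards with docker info; the DOCKER variable is a hypothetical override for dry runs:

```bash
# Sketch: confirm the cgroup driver Docker is actually using.
# DOCKER is a hypothetical override for the docker command (handy for dry runs).
check_docker_cgroup_driver() {
  local want=${1:-systemd} got
  got=$("${DOCKER:-docker}" info --format '{{.CgroupDriver}}') || return 1
  if [ "$got" != "$want" ]; then
    echo "Docker cgroup driver is '$got', expected '$want'" >&2
    return 1
  fi
}

# Usage (as root): systemctl restart docker && check_docker_cgroup_driver
```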

3) Install kubeadm, kubelet and kubectl

The USTC mirror is used here. The version installed here is v1.28.1; change the version number to suit your needs.

Alibaba Cloud mirror address: https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

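```bash
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[k8s]
name=K8s
baseurl=http://mirrors.ustc.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
EOF
yum install -y kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1
# Start kubelet at boot (kubeadm's preflight check warns if it is not enabled)
systemctl enable kubelet
```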
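The official kubeadm installation guide additionally lists the Kubernetes packages under exclude in the repo file, so that a routine yum update cannot upgrade them unexpectedly, and installs them with --disableexcludes. A sketch of that variant for the [k8s] repo used here (write_k8s_repo is a hypothetical helper):

```bash
# Hypothetical helper: same repo definition as above plus an "exclude" line
write_k8s_repo() {
  cat > "${1:-/etc/yum.repos.d/kubernetes.repo}" <<'EOF'
[k8s]
name=K8s
baseurl=http://mirrors.ustc.edu.cn/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
exclude=kubelet kubeadm kubectl
EOF
}

# Usage (as root); the excluded packages must then be installed explicitly:
#   write_k8s_repo
#   yum install -y --disableexcludes=k8s kubelet-1.28.1 kubeadm-1.28.1 kubectl-1.28.1
```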

4) Initialize K8s

List the images required by the installed version:

```bash
kubeadm config images list
```

Configure trust between the nodes so they can log in to each other over SSH without a password:

```bash
ssh-keygen -t rsa -C "admin@123"
# Copy the public key to the other nodes (repeat for each node, e.g. 10.10.10.13)
ssh-copy-id 10.10.10.12
```
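With three or more nodes, the ssh-copy-id step has to be repeated for each one. A small loop sketch; copy_key_to_nodes and the SSH_COPY_ID override are hypothetical, and -N "" creates the key without a passphrase so ssh-keygen does not prompt:

```bash
# Copy the current user's public key to every node given as an argument.
# SSH_COPY_ID is a hypothetical override for the ssh-copy-id command (dry runs).
copy_key_to_nodes() {
  local node
  for node in "$@"; do
    "${SSH_COPY_ID:-ssh-copy-id}" "$node" || echo "failed to copy key to $node" >&2
  done
}

# Usage (on the master):
#   ssh-keygen -t rsa -N "" -f ~/.ssh/id_rsa
#   copy_key_to_nodes 10.10.10.12 10.10.10.13
```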

Pull the images K8s needs to the local machine:

```bash
#!/bin/bash
# Pull each image from the Aliyun mirror, retag it with its registry.k8s.io name,
# then remove the mirror tag
mirror=registry.cn-hangzhou.aliyuncs.com/google_containers
img=(
  kube-apiserver:v1.28.1
  kube-controller-manager:v1.28.1
  kube-scheduler:v1.28.1
  kube-proxy:v1.28.1
  coredns:v1.10.1
  etcd:3.5.9-0
  pause:3.6
)
for i in "${img[@]}"; do
  docker pull "$mirror/$i"
  if [ "$i" == "coredns:v1.10.1" ]; then
    # CoreDNS lives under an extra coredns/ path segment on registry.k8s.io
    docker tag "$mirror/$i" "registry.k8s.io/coredns/$i"
  else
    docker tag "$mirror/$i" "registry.k8s.io/$i"
  fi
  docker rmi "$mirror/$i"
done
```
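Instead of hardcoding the image list, the names can be derived from kubeadm config images list and mapped to the same Aliyun mirror. A sketch, assuming the mirror layout used in the script above (CoreDNS stored without the extra coredns/ path segment); to_mirror is a hypothetical helper. Note that the list kubeadm prints may contain a different pause tag than the 3.6 that containerd asks for.

```bash
# Hypothetical helper: map a name printed by `kubeadm config images list`
# (registry.k8s.io/...) to the assumed Aliyun mirror path.
# "coredns/coredns:TAG" becomes "coredns:TAG" on the mirror.
to_mirror() {
  local name=${1#registry.k8s.io/}
  name=${name#coredns/}
  echo "registry.cn-hangzhou.aliyuncs.com/google_containers/$name"
}

# Usage:
#   kubeadm config images list --kubernetes-version v1.28.1 | while read -r img; do
#     docker pull "$(to_mirror "$img")" && docker tag "$(to_mirror "$img")" "$img"
#   done
```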

Initialize the cluster's master node:

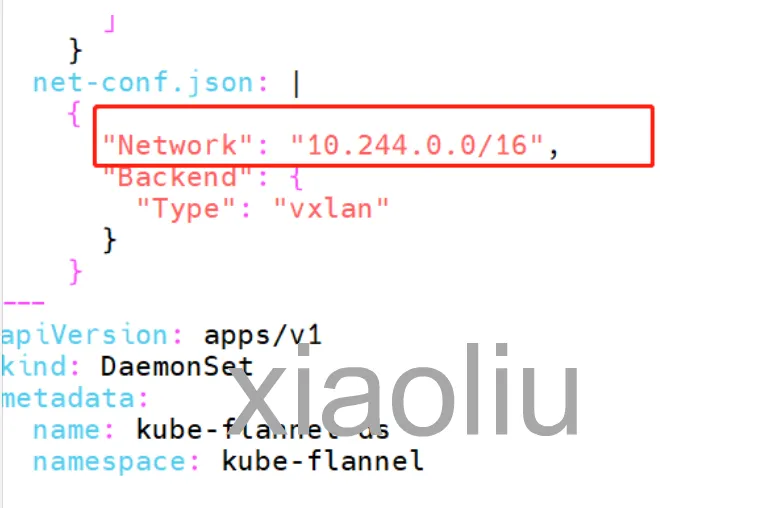

```bash
kubeadm init \
  --apiserver-advertise-address=0.0.0.0 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.28.1 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
```

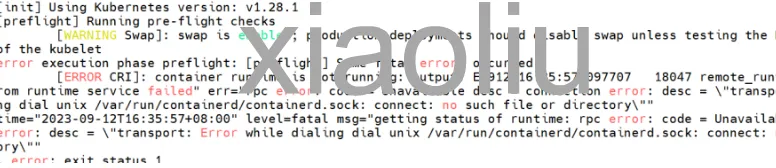

If the first run fails with the error shown in the screenshot:

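```bash
# Fix: the config.toml shipped with the containerd.io package disables the CRI plugin
# (disabled_plugins = ["cri"]) that kubelet needs, so move it out of the way
mv /etc/containerd/config.toml /etc/containerd/config.toml.bak
systemctl restart containerd
```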

After the change, try initializing the cluster again:

```bash
# The previous initialization failed, so reset the cluster before initializing again
kubeadm reset
# Initialize the master
kubeadm init \
  --apiserver-advertise-address=0.0.0.0 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.28.1 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
```

It still fails, this time with a timeout.

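Solution: since Kubernetes v1.24 removed dockershim, kubelet no longer uses Docker and talks to containerd directly, so images pulled with Docker are invisible to it. The pause image therefore has to be imported into containerd.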

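```bash
docker save registry.k8s.io/pause:3.6 -o pause.tar
# Note: kubelet looks for images in containerd's k8s.io namespace; the namespace
# name must be exactly k8s.io, otherwise initialization fails
ctr -n k8s.io images import pause.tar
systemctl daemon-reload
systemctl restart kubelet
```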
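Importing the pause image by hand has to be repeated on every node. A commonly used alternative (not the method used in this article) is to regenerate containerd's full default configuration, which enables the CRI plugin, and point its sandbox_image setting at a reachable mirror. A sketch, assuming a containerd 1.6-style config with a sandbox_image line and GNU sed; set_sandbox_image is a hypothetical helper. Because kubeadm configures kubelet with the systemd cgroup driver by default, the usage also switches containerd's SystemdCgroup option to true.

```bash
# Hypothetical helper: replace the value of the sandbox_image line in a containerd
# config.toml (assumes the layout: sandbox_image = "...")
set_sandbox_image() {
  local image=$1 file=${2:-/etc/containerd/config.toml}
  sed -i -E "s#^([[:space:]]*sandbox_image = ).*#\1\"$image\"#" "$file"
}

# Usage (as root, on every node); mirror path taken from the image pull script:
#   containerd config default > /etc/containerd/config.toml
#   set_sandbox_image registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
#   sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
#   systemctl restart containerd
```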

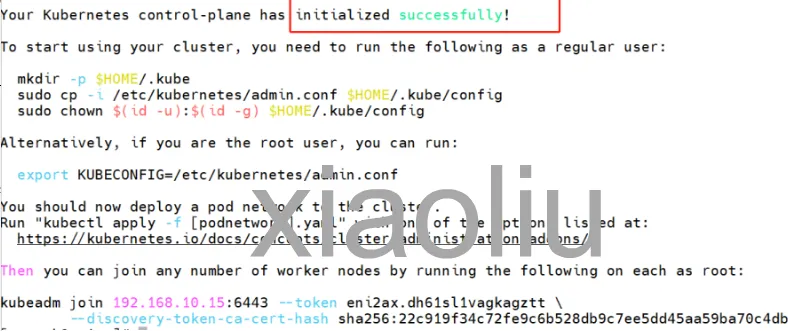

Initialize the master node again, and this time it finally succeeds!

```bash
# The previous initialization failed, so reset the cluster first
kubeadm reset
rm -rf $HOME/.kube/config
rm -rf /var/lib/etcd
# Initialize the master node again
kubeadm init \
  --apiserver-advertise-address=0.0.0.0 \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version=v1.28.1 \
  --service-cidr=10.96.0.0/12 \
  --pod-network-cidr=10.244.0.0/16
```

Run the following commands as prompted:


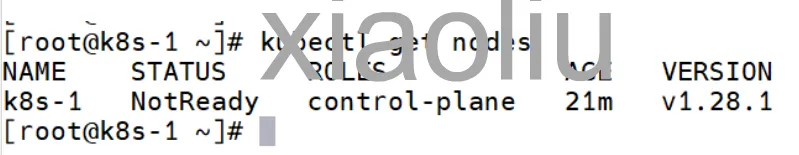

```bash
mkdir -p $HOME/.kube
# If you are not root, prefix the next two commands with sudo
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config
# List the current nodes
kubectl get nodes
```

Join the nodes to the cluster:


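```bash
# Copy the join command printed by your own successful init; do not paste the one
# below, it will fail on your cluster
# Run this on the other (worker) nodes, NOT on the master
kubeadm join 192.168.10.15:6443 --token eni2ax.dh61sl1vagkagztt \
  --discovery-token-ca-cert-hash sha256:22c919f34c72fe9c6b528db9c7ee5dd45aa59ba70c4dbf9218bfe0f722de52a1
```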

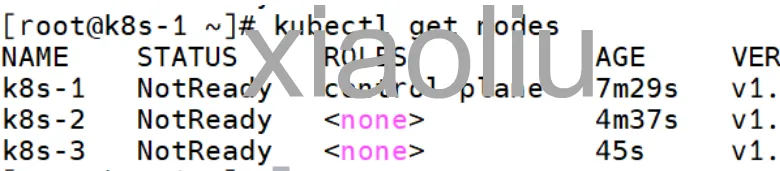

Check the newly joined nodes:

```bash
# List the nodes
kubectl get nodes
# Check the status of the system pods
kubectl get pod -n kube-system
```
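Right after joining, nodes usually report NotReady until the network plugin is running. A sketch for listing the nodes that are not Ready from kubectl get nodes output (not_ready_nodes is a hypothetical helper; a status such as Ready,SchedulingDisabled also counts as not Ready here), plus kubectl wait for blocking until all nodes are Ready:

```bash
# Print the names of nodes whose STATUS column is not exactly "Ready",
# reading `kubectl get nodes` output on stdin (header line skipped)
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" {print $1}'
}

# Usage:
#   kubectl get nodes | not_ready_nodes
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```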

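Note: by default a token is valid for 24 hours and cannot be used after it expires. In that case, generate a new token; this command also prints the complete join command: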


```bash
kubeadm token create --print-join-command
```
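The hash part of the join command does not change when a token expires: it is derived from the cluster CA's public key and can be recomputed on the master. The openssl pipeline below is the one given in the kubeadm join documentation; wrapping it in ca_cert_hash is a hypothetical convenience. kubeadm token list shows the tokens that are still valid.

```bash
# Recompute the --discovery-token-ca-cert-hash value (SHA-256 of the CA public key)
ca_cert_hash() {
  local cert=${1:-/etc/kubernetes/pki/ca.crt} hex
  hex=$(openssl x509 -pubkey -in "$cert" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')
  echo "sha256:$hex"
}

# Usage (on the master): ca_cert_hash
```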

Install the network plugin (Flannel):

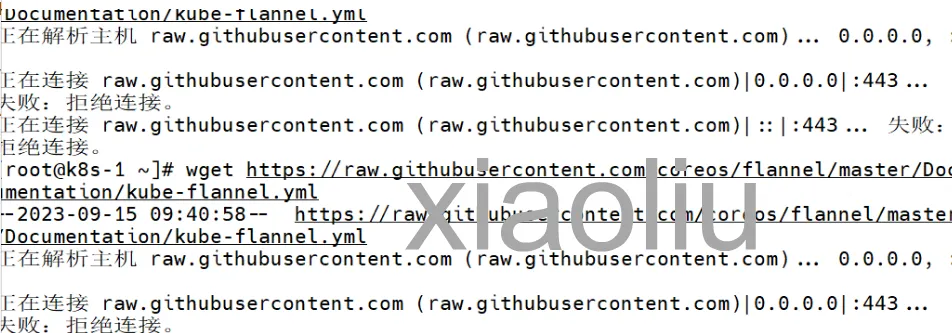

```bash
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
```

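If the download fails inside the virtual machine, open the URL on your local machine and copy the page into a file, or simply save the content below as kube-flannel.yml: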


```yaml
---
kind: Namespace
apiVersion: v1
metadata:
  name: kube-flannel
  labels:
    k8s-app: flannel
    pod-security.kubernetes.io/enforce: privileged
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
rules:
- apiGroups:
  - ""
  resources:
  - pods
  verbs:
  - get
- apiGroups:
  - ""
  resources:
  - nodes
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - nodes/status
  verbs:
  - patch
- apiGroups:
  - networking.k8s.io
  resources:
  - clustercidrs
  verbs:
  - list
  - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  labels:
    k8s-app: flannel
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-flannel
---
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: flannel
  name: flannel
  namespace: kube-flannel
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-flannel
  labels:
    tier: node
    k8s-app: flannel
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds
  namespace: kube-flannel
  labels:
    tier: node
    app: flannel
    k8s-app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/os
                operator: In
                values:
                - linux
      hostNetwork: true
      priorityClassName: system-node-critical
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni-plugin
        image: docker.io/flannel/flannel-cni-plugin:v1.2.0
        command:
        - cp
        args:
        - -f
        - /flannel
        - /opt/cni/bin/flannel
        volumeMounts:
        - name: cni-plugin
          mountPath: /opt/cni/bin
      - name: install-cni
        image: docker.io/flannel/flannel:v0.22.2
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: docker.io/flannel/flannel:v0.22.2
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN", "NET_RAW"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: EVENT_QUEUE_DEPTH
          value: "5000"
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
        - name: xtables-lock
          mountPath: /run/xtables.lock
      volumes:
      - name: run
        hostPath:
          path: /run/flannel
      - name: cni-plugin
        hostPath:
          path: /opt/cni/bin
      - name: cni
        hostPath:
          path: /etc/cni/net.d
      - name: flannel-cfg
        configMap:
          name: kube-flannel-cfg
      - name: xtables-lock
        hostPath:
          path: /run/xtables.lock
          type: FileOrCreate
```

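Note: if the pod network you passed to kubeadm init with --pod-network-cidr differs from the "Network" value in net-conf.json in this file, edit the file to match, otherwise Flannel reports errors.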
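Rather than editing by hand, the Network value can be rewritten with sed. A sketch, assuming the "Network": "..." line format of the manifest above and GNU sed; set_flannel_network is a hypothetical helper:

```bash
# Hypothetical helper: set the "Network" CIDR in kube-flannel.yml
set_flannel_network() {
  local cidr=$1 file=${2:-kube-flannel.yml}
  # Keep the key and surrounding quotes, replace only the quoted value
  sed -i -E "s#(\"Network\": \")[^\"]*\"#\1$cidr\"#" "$file"
}

# Usage: set_flannel_network 172.16.0.0/16 kube-flannel.yml
```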

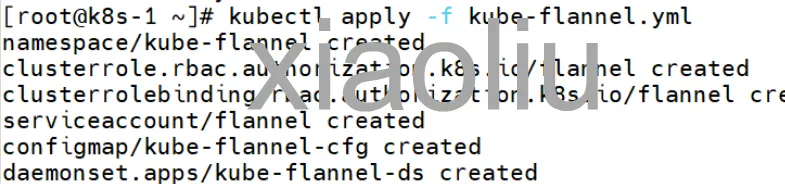

Apply the manifest to install the network plugin:


```bash
kubectl apply -f kube-flannel.yml
```

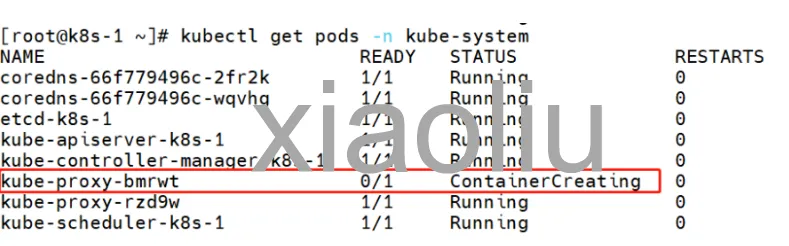

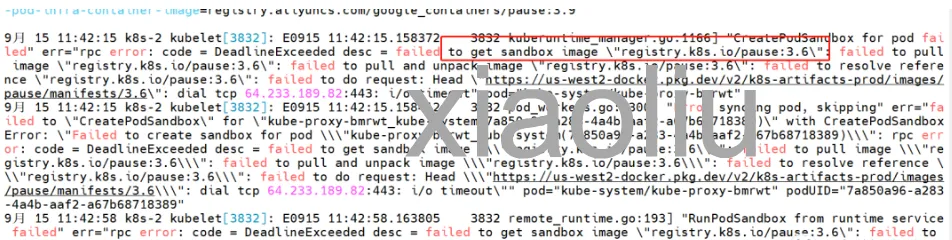

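After installation, the newly added worker nodes were still not running. The kubelet logs on the worker nodes (for example `journalctl -u kubelet`) showed that they needed the pause image at version 3.6, so that image has to be pulled on each worker node.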

On each worker node, pull the pause:3.6 image and import it into containerd's image store:


```bash
# Pull the image from the Aliyun registry
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
# Retag it so that it matches the default K8s image name
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6 registry.k8s.io/pause:3.6
# Save the image as a tar file
docker save registry.k8s.io/pause:3.6 -o pause.tar
# Import the image into containerd's k8s.io namespace
ctr -n k8s.io images import pause.tar
# Reload systemd unit files
systemctl daemon-reload
# Restart kubelet
systemctl restart kubelet
```

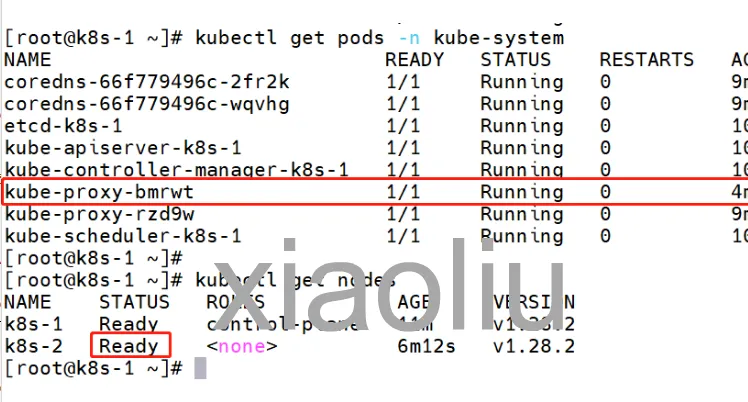

Check the node status again: the nodes are now running normally.

Test the cluster

Create a pod in the cluster to verify that the cluster works:


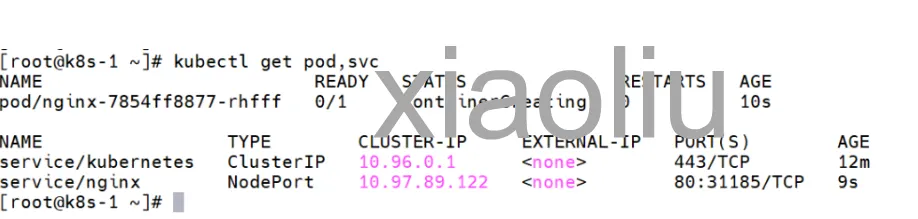

```bash
kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort
kubectl get pod,svc
```
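The NodePort assigned to the nginx service is chosen automatically (by default from the 30000-32767 range) and appears in the PORT(S) column of kubectl get svc, e.g. 80:30080/TCP. A sketch for extracting it (nodeport_from_ports is a hypothetical helper), with the jsonpath form for a live cluster:

```bash
# Hypothetical helper: extract the NodePort from a PORT(S) value such as "80:30080/TCP"
nodeport_from_ports() {
  local ports=${1#*:}   # drop the service port and the colon ("80:")
  echo "${ports%%/*}"   # drop the protocol suffix ("/TCP")
}

# Usage on the cluster; the port can also be read directly:
#   port=$(kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}')
#   curl "http://10.10.10.12:$port"     # any node IP should answer
```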

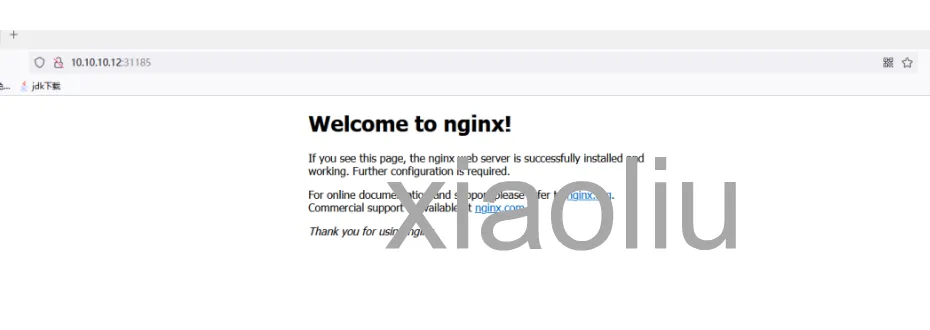

Visit a node's IP on the NodePort and the nginx page opens normally. At this point, the K8s cluster has been set up successfully.

