# Initialization

Run everything in this section on every machine.

## DNS

```shell
# Use AliDNS (223.5.5.5) as the resolver; ">" overwrites the existing file
echo "nameserver 223.5.5.5" > /etc/resolv.conf
```
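Redirecting with `>` replaces the whole file, so any existing nameservers are lost. The sketch below keeps a backup first. `set_nameserver` and its file argument are hypothetical; the file argument lets you try it on a scratch file before touching /etc/resolv.conf. On hosts where NetworkManager manages this file, the change may be overwritten later.

```shell
#!/bin/sh
# Hypothetical helper: back up a resolv.conf-style file, then point it at one nameserver.
# Usage: set_nameserver <file> <ip>
set_nameserver() {
  file=$1
  ip=$2
  # keep a copy of the previous contents (if any) next to the file
  if [ -f "$file" ]; then
    cp -p "$file" "$file.bak"
  fi
  printf 'nameserver %s\n' "$ip" > "$file"
}

# Example (as root): set_nameserver /etc/resolv.conf 223.5.5.5
```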

## KubeKey (kk) dependencies

```shell
yum update -y
yum install -y conntrack socat curl ebtables ipset ipvsadm chrony
```
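You can confirm that the packages installed their executables before running kk with a small check. `missing_cmds` is a hypothetical helper. The names passed to it are the binaries these packages normally provide; chrony's daemon is `chronyd`.

```shell
#!/bin/sh
# Hypothetical helper: print every command from the argument list that is not on PATH.
# Returns 0 when nothing is missing, 1 otherwise.
missing_cmds() {
  rc=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd"
      rc=1
    fi
  done
  return $rc
}

# Example (run as root, since several of these live in /usr/sbin):
# missing_cmds conntrack socat curl ebtables ipset ipvsadm chronyd || echo "install the commands listed above"
```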

## Server time zone

```shell
timedatectl set-timezone Asia/Shanghai
```

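## Time synchronization

```shell
# Point chrony at the China NTP pool. The pattern '^pool pool.*' only matched a default
# line of the form "pool pool.ntp.org iburst"; '^pool .*' also covers lines such as
# "pool 2.rocky.pool.ntp.org iburst".
sed -i 's/^pool .*/pool cn.pool.ntp.org iburst/' /etc/chrony.conf
# restart rather than only "enable --now", so an already running chronyd reloads the config
systemctl enable chronyd && systemctl restart chronyd
chronyc sourcestats -v
```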
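Some chrony.conf layouts list their sources as `server` lines instead of `pool` lines (CentOS 7, for example), and the sed above leaves those untouched. The sketch below handles both layouts. `set_ntp_pool` is a hypothetical helper: it comments out every existing `pool`/`server` line and appends a single pool entry.

```shell
#!/bin/sh
# Hypothetical helper: comment out existing "pool"/"server" lines in a chrony.conf-style
# file and append one pool entry.
# Usage: set_ntp_pool <file> <pool-host>
set_ntp_pool() {
  file=$1
  pool=$2
  # "&" is the matched text, so "pool ..." becomes "#pool ..." (same for "server")
  sed -i -E 's/^(pool|server)[[:space:]]/#&/' "$file"
  printf 'pool %s iburst\n' "$pool" >> "$file"
}

# Example (as root):
# set_ntp_pool /etc/chrony.conf cn.pool.ntp.org && systemctl restart chronyd
```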

## Disable the system firewall

```shell
systemctl stop firewalld && systemctl disable firewalld
```

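## Disable SELinux

```shell
# persistent setting, takes effect after the next reboot
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
# switch to permissive for the current boot (prints an error if SELinux is already disabled)
setenforce 0
```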
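The sed above only rewrites `SELINUX=enforcing`, so a host that is already set to `permissive` keeps that value. The sketch below sets `disabled` whatever the current value is. `disable_selinux_config` is a hypothetical helper, and like the sed, it only takes effect after a reboot.

```shell
#!/bin/sh
# Hypothetical helper: force SELINUX=disabled in an /etc/selinux/config-style file.
# "^SELINUX=" does not match the SELINUXTYPE= line, which is left alone.
# Usage: disable_selinux_config <file>
disable_selinux_config() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Example (as root): disable_selinux_config /etc/selinux/config
```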

## Create an SSH key pair (on the master)

```shell
ssh-keygen -t rsa -b 4096
```

## Passwordless SSH from the master to every node

```shell
# repeat for every node IP in the cluster
ssh-copy-id root@10.0.0.131
```
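With several nodes, a loop saves typing. `copy_key_to_hosts` and the `DRY_RUN` switch are hypothetical. `DRY_RUN=1` only prints the commands, which is useful for checking the host list first.

```shell
#!/bin/sh
# Hypothetical helper: run ssh-copy-id for root on every host given as an argument.
# DRY_RUN=1 prints the commands instead of running them.
copy_key_to_hosts() {
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh-copy-id root@$host"
    else
      ssh-copy-id "root@$host" || echo "failed: $host" >&2
    fi
  done
}

# Example, with the node IPs of the three-master / three-worker config:
# copy_key_to_hosts 10.0.0.120 10.0.0.121 10.0.0.122 10.0.0.123 10.0.0.124 10.0.0.125
```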

# Common kk commands

## Delete a cluster

```shell
./kk delete cluster -f config-sample.yaml
```

## Create a cluster

```shell
./kk create cluster -f config-sample.yaml
```

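## Generate a config file to suit your needs

```shell
# writes the cluster config to ksp-v131.yaml for Kubernetes v1.31.0
./kk create config --name ksp-v131 -f ksp-v131.yaml --with-kubernetes v1.31.0
```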

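## Install KubeSphere (ks) together with Kubernetes (k8s)

```shell
# no config file: installs an all-in-one cluster on the current machine
./kk create cluster --with-kubernetes v1.22.12 --with-kubesphere v3.4.1
```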

# kubectl command completion

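```shell
yum install -y bash-completion
# per user: load completion in every new bash session
echo 'source <(kubectl completion bash)' >>~/.bashrc
# or system-wide; either one is enough. Open a new shell afterwards.
kubectl completion bash >/etc/bash_completion.d/kubectl
```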
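Appending with `>>` adds a duplicate line each time the step is re-run. The sketch below appends only when the exact line is missing. `add_line_once` is a hypothetical helper. The optional `alias k=kubectl` plus `complete -o default -F __start_kubectl k` pair follows the kubectl completion setup in the Kubernetes documentation.

```shell
#!/bin/sh
# Hypothetical helper: append a line to a file only if that exact line is not already there.
# Usage: add_line_once <file> <line>
add_line_once() {
  file=$1
  line=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example: completion for kubectl and for a short alias "k"
# add_line_once ~/.bashrc 'source <(kubectl completion bash)'
# add_line_once ~/.bashrc 'alias k=kubectl'
# add_line_once ~/.bashrc 'complete -o default -F __start_kubectl k'
```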

# Install kk

```shell
mkdir -p ~/kubekey
cd ~/kubekey/

# Use the China download zone (when access to GitHub is restricted)
export KKZONE=cn
curl -sfL https://get-kk.kubesphere.io | sh -

# List the Kubernetes versions supported by this KubeKey release
./kk version --show-supported-k8s
```

You can also run kk.sh directly. If the script cannot be fetched with curl, copy the script below and run it:

```shell
#!/bin/sh

# Copyright 2020 The KubeSphere Authors.
#
# Licensed under the Apache License, Version 2.0 (the "License");
# you may not use this file except in compliance with the License.
# You may obtain a copy of the License at
#
#     http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

ISLINUX=true
OSTYPE="linux"

if [ "x$(uname)" != "xLinux" ]; then
  echo ""
  echo 'Warning: Non-Linux operating systems are not supported! After downloading, please copy the tar.gz file to linux.'
  ISLINUX=false
fi

# Fetch latest version
if [ "x${VERSION}" = "x" ]; then
  VERSION="$(curl -sL https://api.github.com/repos/kubesphere/kubekey/releases |
    grep -o 'download/v[0-9]*.[0-9]*.[0-9]*/' |
    sort --version-sort |
    tail -1 | awk -F'/' '{ print $2}')"
  VERSION="${VERSION##*/}"
fi

if [ -z "${ARCH}" ]; then
  case "$(uname -m)" in
  x86_64)
    ARCH=amd64
    ;;
  armv8*)
    ARCH=arm64
    ;;
  aarch64*)
    ARCH=arm64
    ;;
  *)
    # ARCH is still empty here, so report the detected machine type instead
    echo "$(uname -m), isn't supported"
    exit 1
    ;;
  esac
fi

if [ "x${VERSION}" = "x" ]; then
  echo "Unable to get latest Kubekey version. Set VERSION env var and re-run. For example: export VERSION=v1.0.0"
  echo ""
  exit 1
fi

DOWNLOAD_URL="https://github.com/kubesphere/kubekey/releases/download/${VERSION}/kubekey-${VERSION}-${OSTYPE}-${ARCH}.tar.gz"
if [ "x${KKZONE}" = "xcn" ]; then
  DOWNLOAD_URL="https://kubernetes.pek3b.qingstor.com/kubekey/releases/download/${VERSION}/kubekey-${VERSION}-${OSTYPE}-${ARCH}.tar.gz"
fi

echo ""
echo "Downloading kubekey ${VERSION} from ${DOWNLOAD_URL} ..."
echo ""

if ! curl -fsLO "$DOWNLOAD_URL"; then
  echo ""
  echo "Failed to download Kubekey ${VERSION} !"
  echo ""
  echo "Please verify the version you are trying to download."
  echo ""
  exit 1
fi

if [ "${ISLINUX}" = true ]; then
  filename="kubekey-${VERSION}-${OSTYPE}-${ARCH}.tar.gz"
  if command -v tar >/dev/null 2>&1; then
    tar -xzf "${filename}"
  else
    echo "Kubekey ${VERSION} Download Complete!"
    echo ""
    echo "Try to unpack the ${filename} failed."
    echo "tar: command not found, please unpack the ${filename} manually."
    exit
  fi
fi

echo ""
echo "Kubekey ${VERSION} Download Complete!"
echo ""
```
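The script only auto-detects the version and architecture when `VERSION` and `ARCH` are empty, so you can also set them beforehand (for example `export VERSION=...`, as its own error message suggests). The architecture mapping can be pulled out into a function to check which file name the script will request. `kk_arch` is hypothetical and mirrors the script's `case` statement.

```shell
#!/bin/sh
# Hypothetical helper mirroring the case statement in kk.sh: map `uname -m` output to the
# architecture suffix used in the kubekey release file names.
# Prints nothing and returns 1 for unsupported machines.
kk_arch() {
  case "$1" in
    x86_64) echo amd64 ;;
    armv8* | aarch64*) echo arm64 ;;
    *) return 1 ;;
  esac
}

# Example: the file name the script would download (VERSION must be set)
# echo "kubekey-${VERSION}-linux-$(kk_arch "$(uname -m)").tar.gz"
```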

# Create the configuration file

```shell
./kk create config --name ksp-v131 -f ksp-v131.yaml --with-kubernetes v1.31.0
```

Edit the configuration file with `vim ksp-v131.yaml`. Most of the changes are in the `kind: Cluster` section:

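- hosts: the IP, SSH user, SSH password and SSH port of each node. The examples below use the default SSH port 22. A different port can be set with a `port` field in the host entry.
- roleGroups: 3 etcd/control-plane nodes and 3 worker nodes
- internalLoadbalancer: enables the built-in HAProxy load balancer
- domain: a custom domain such as lb.opsxlab.cn; without special requirements, keep the default lb.kubesphere.local
- clusterName: a custom name such as opsxlab.cn; without special requirements, keep the default cluster.local
- autoRenewCerts: renews the certificates automatically when they expire; defaults to true
- containerManager: use containerd
- storage.openebs.basePath: a new setting that is not in the generated file; sets the default OpenEBS storage path to /data/openebs/local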
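The single-master config's inline comment says the `basePath` directory must exist before installing. The sketch below creates it on every node over the passwordless SSH set up earlier. `prepare_openebs_dirs` and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: create the OpenEBS local PV base path on each host via SSH.
# Assumes passwordless root SSH to every host. DRY_RUN=1 only prints the commands.
# Usage: prepare_openebs_dirs <base-path> <host>...
prepare_openebs_dirs() {
  base=$1
  shift
  for host in "$@"; do
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "ssh root@$host mkdir -p $base"
    else
      ssh "root@$host" "mkdir -p '$base'" || echo "failed: $host" >&2
    fi
  done
}

# Example: prepare_openebs_dirs /data/openebs/local 10.0.0.110 10.0.0.111 10.0.0.112
```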

## Single master (the master runs every role)

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: sample # cluster name
spec:
  hosts:
  - {name: master, address: 192.168.137.129, internalAddress: 192.168.137.129, user: root, password: "<password of this node>"}
  - {name: node1, address: 192.168.137.130, internalAddress: 192.168.137.130, user: root, password: "<password of this node>"}
  roleGroups:
    etcd: # stores the cluster data
    - master
    control-plane: # manages the cluster
    - master
    worker: # nodes that run the workloads
    - master
    - node1
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy # enable the built-in HAProxy load balancer
    domain: lb.kubesphere.local # custom cluster domain; keep the default unless you need otherwise
    address: ""
    port: 6443
  kubernetes:
    version: v1.29.5 # Kubernetes version
    clusterName: cluster.local # custom cluster name; keep the default unless you need otherwise
    autoRenewCerts: true # when enabled, cluster certificates are renewed automatically when they expire
    containerManager: docker # container runtime; containerd also works. Use a runtime your Kubernetes version supports
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    ## multus support. https://github.com/k8snetworkplumbingwg/multus-cni
    multusCNI:
      enabled: false
  storage:
    openebs: # without it, storage does not work in a test environment
      # Not in the generated file by default: base path of the local PV.
      # Use OpenEBS for development and NFS/Ceph for persistent storage in production.
      # Create /data/openebs/local before installing.
      basePath: /data/openebs/local
  registry:
    privateRegistry: "" # private registry address, used for offline installation
    namespaceOverride: "" # overrides the image namespace in the private registry
    registryMirrors: ["<registry mirror URL>"]
    insecureRegistries: []
  addons: []
```
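The host list in the config can drive the per-node loops in this article, so you don't need to type the IPs twice. The sketch below relies on the one-line `{name: ..., address: ..., ...}` host format used in these configs. `hosts_from_config` is a hypothetical helper, not part of kk.

```shell
#!/bin/sh
# Hypothetical helper: print the "address" value of every one-line host entry.
# Lines without "{...name:" (e.g. controlPlaneEndpoint's address: "") are skipped;
# "internalAddress" is not matched because the pattern is case-sensitive.
# Usage: hosts_from_config <config.yaml>
hosts_from_config() {
  sed -n '/{.*name:/s/.*[{ ,]address: *\([^,} ]*\).*/\1/p' "$1"
}

# Example: for h in $(hosts_from_config ksp-v131.yaml); do ssh "root@$h" hostname; done
```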

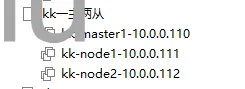

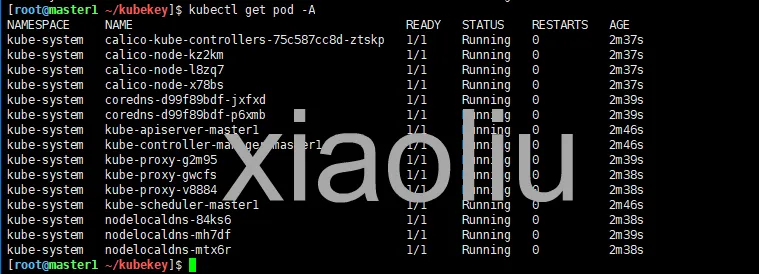

## One master, two workers

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: ksp-v131
spec:
  hosts:
  - {name: master1, address: 10.0.0.110, internalAddress: 10.0.0.110, user: root, password: "1"}
  - {name: node1, address: 10.0.0.111, internalAddress: 10.0.0.111, user: root, password: "1"}
  - {name: node2, address: 10.0.0.112, internalAddress: 10.0.0.112, user: root, password: "1"}
  roleGroups:
    etcd:
    - master1
    control-plane:
    - master1
    worker:
    - node1
    - node2
  controlPlaneEndpoint:
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.31.0
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    multusCNI:
      enabled: false
  storage:
    openebs:
      basePath: /data/openebs/local
  registry:
    privateRegistry: "registry.cn-hangzhou.aliyuncs.com" # use the Alibaba Cloud registry
    namespaceOverride: "kubesphereio" # official KubeSphere namespace on the Alibaba Cloud registry
    registryMirrors: []
    insecureRegistries: []
  addons: []
```

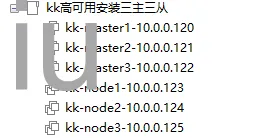

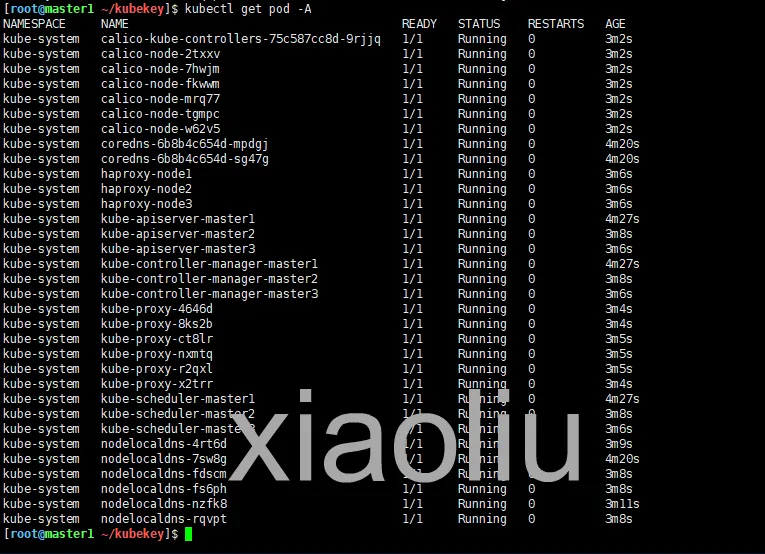

## Three masters, three workers

```yaml
apiVersion: kubekey.kubesphere.io/v1alpha2
kind: Cluster
metadata:
  name: ksp-v131
spec:
  hosts:
  - {name: master1, address: 10.0.0.120, internalAddress: 10.0.0.120, user: root, password: "1"}
  - {name: master2, address: 10.0.0.121, internalAddress: 10.0.0.121, user: root, password: "1"}
  - {name: master3, address: 10.0.0.122, internalAddress: 10.0.0.122, user: root, password: "1"}
  - {name: node1, address: 10.0.0.123, internalAddress: 10.0.0.123, user: root, password: "1"}
  - {name: node2, address: 10.0.0.124, internalAddress: 10.0.0.124, user: root, password: "1"}
  - {name: node3, address: 10.0.0.125, internalAddress: 10.0.0.125, user: root, password: "1"}
  roleGroups:
    etcd:
    - master1
    - master2
    - master3
    control-plane:
    - master1
    - master2
    - master3
    worker:
    - node1
    - node2
    - node3
  controlPlaneEndpoint:
    ## Internal loadbalancer for apiservers
    internalLoadbalancer: haproxy
    domain: lb.kubesphere.local
    address: ""
    port: 6443
  kubernetes:
    version: v1.31.0
    clusterName: cluster.local
    autoRenewCerts: true
    containerManager: containerd
  etcd:
    type: kubekey
  network:
    plugin: calico
    kubePodsCIDR: 10.233.64.0/18
    kubeServiceCIDR: 10.233.0.0/18
    multusCNI:
      enabled: false
  storage:
    openebs:
      basePath: /data/openebs/local
  registry:
    privateRegistry: "registry.cn-hangzhou.aliyuncs.com" # use the Alibaba Cloud registry
    namespaceOverride: "kubesphereio" # official KubeSphere namespace on the Alibaba Cloud registry
    registryMirrors: []
    insecureRegistries: []
  addons: []
```
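etcd stays healthy only while a majority (quorum) of its members is up. With 3 members, the cluster tolerates 1 failure. A 4th member does not raise that to 2, which is why the etcd group is usually given an odd size, as in the three-master layout. The sketch below counts the members listed under `etcd:` in a config. `etcd_member_count` is a hypothetical helper that relies on the indented list layout used here.

```shell
#!/bin/sh
# Hypothetical helper: count the "- name" items that directly follow an "etcd:" key.
# The top-level "etcd: / type: kubekey" block has no list items, so only
# roleGroups.etcd members are counted. Blank lines are ignored.
# Usage: etcd_member_count <config.yaml>
etcd_member_count() {
  awk '
    /^[ \t]*etcd:/          { in_etcd = 1; next }
    in_etcd && /^[ \t]*- /  { n++; next }
    in_etcd && /[^ \t]/     { in_etcd = 0 }
    END { print n + 0 }
  ' "$1"
}

# Example: n=$(etcd_member_count ksp-v131.yaml); [ $((n % 2)) -eq 1 ] || echo "even etcd count: $n"
```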

# KubeSphere v4.1.2

Reference:

## Create the configuration file

```shell
# without --name/-f, kk writes config-sample.yaml
./kk create config --with-kubernetes v1.31.0
```

Note: in this Kubernetes setup, images can be pulled with crictl. ctr still uses its default configuration, so pulling with it fails.
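The two tools reach containerd differently. crictl goes through the CRI plugin, which is where the registry settings used by kubelet apply. ctr is a low-level client: it works in the `default` namespace unless given `-n k8s.io` (the namespace that holds the Kubernetes images), and it expects fully qualified image references. The sketch below only prints the matching pull command. `pull_cmd` is hypothetical, and the ctr form may still fail if the image is reachable only through a mirror configured for the CRI plugin.

```shell
#!/bin/sh
# Hypothetical helper: print the pull command for crictl or ctr.
# crictl uses the CRI plugin; ctr needs "-n k8s.io" and a fully qualified
# reference such as docker.io/library/nginx:latest.
# Usage: pull_cmd <crictl|ctr> <image>
pull_cmd() {
  case "$1" in
    crictl) echo "crictl pull $2" ;;
    ctr)    echo "ctr -n k8s.io images pull $2" ;;
    *)      echo "unknown tool: $1" >&2; return 1 ;;
  esac
}

# Example (as root on a node): eval "$(pull_cmd crictl nginx:latest)"
```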

# Offline installation

References:

https://www.kubesphere.io/zh/docs/v3.4/installing-on-linux/introduction/air-gapped-installation/

https://www.kubesphere.io/zh/blogs/deploying-kubesphere-and-k8s-offline-with-kubekey/

# Environment
